Couger Inc. (Headquarters: Shibuya-ku, Tokyo; CEO: Atsushi Ishii; hereinafter "Couger") has begun offering "Dimension," its 3DCG (*1) simulator for training machine learning AI in spaces where people are active, such as streets and commercial facilities, and has started accepting applications from the general public. Honda R&D Co.,Ltd. (Headquarters: Minato-ku, Tokyo; President: Takahiro Hachigo; hereinafter "Honda R&D") has already adopted this technology and is using it in its research.

Background

Robots, drones, and self-driving cars are certain to become part of people's daily lives in the near future, yet most current AI training relies on simulations of very basic environments such as buildings and roads, or on simulations that do not reflect human behavior. In practical use, people act in a vast number of different spaces, and AI training should cover all of them, but reproducing them all in real space is impossible. Dimension makes it easy to reproduce any space in which humans act and have AI learn from it.

Dimension Features

Differences from Existing Simulators

- Dimension includes not only static assets such as roads and buildings but also human models that move and act within that space.

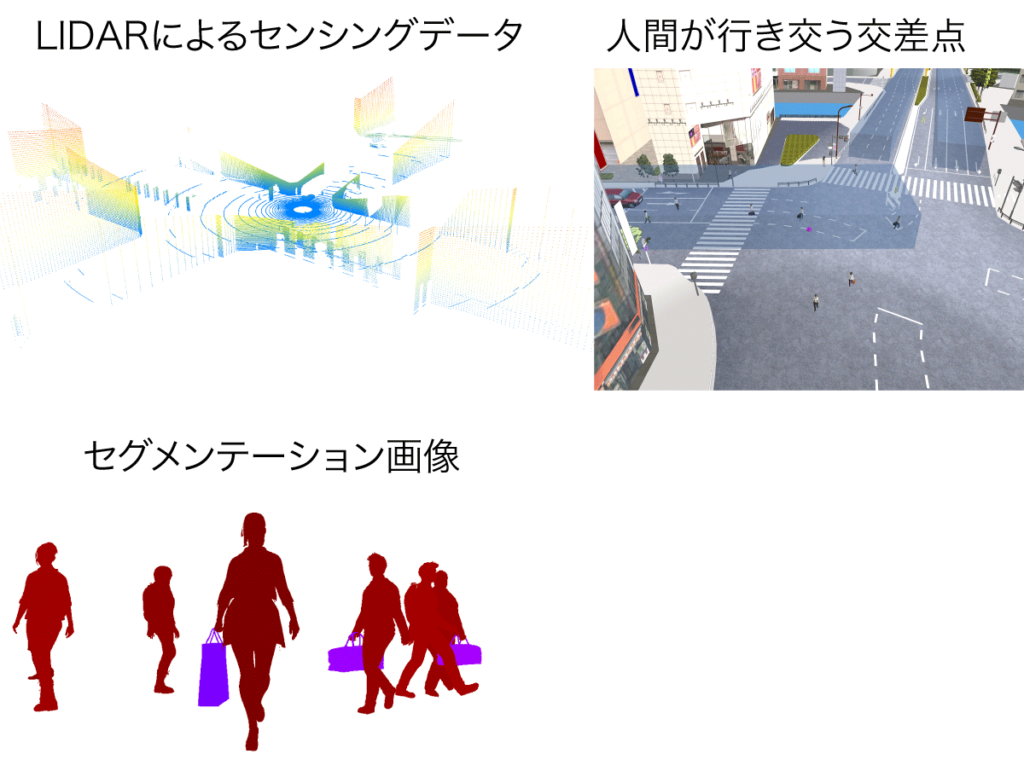

- LIDAR (*2) sensing data can also be generated. Because LIDAR data consists of points rather than photographic images, there is effectively no difference between LIDAR data sensed from 3DCG and LIDAR data sensed in reality, so the simulated data can be used for training. This also removes the need to spend huge sums making the 3DCG look more realistic.

- While most existing simulators are designed to "test" AI that has already been developed, Dimension can also be used to train it, enabling more advanced machine learning. To borrow an analogy from human learning, it serves as both the textbook and the exam.
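The LIDAR point above can be illustrated with a toy model. The sketch below is a minimal, hypothetical example, not Dimension's implementation: it assumes a 2D scene in which each person is approximated by a circle and a sensor at the origin sweeps evenly spaced beams. Each beam records the distance to the nearest surface it hits, and those distances become (x, y) points. In this simplified model only geometry affects the output, so no colors or textures have to be rendered. The names `Circle`, `ray_circle_distance`, `scan`, and `time_of_flight` are illustrative only.

```python
import math
from dataclasses import dataclass

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


@dataclass
class Circle:
    """Hypothetical stand-in for a person: a circle in the ground plane."""
    cx: float
    cy: float
    r: float


def ray_circle_distance(angle, c):
    """Distance from the origin along a ray at `angle` to circle c, or None if it misses."""
    dx, dy = math.cos(angle), math.sin(angle)
    # Solve |t*d - center|^2 = r^2 for t >= 0, where d is a unit vector.
    b = dx * c.cx + dy * c.cy
    disc = b * b - (c.cx ** 2 + c.cy ** 2 - c.r ** 2)
    if disc < 0:
        return None
    sq = math.sqrt(disc)
    for t in (b - sq, b + sq):  # nearer intersection first
        if t >= 0:
            return t
    return None


def scan(circles, n_beams=360, max_range=50.0):
    """Simulate one 2D LIDAR sweep and return the (x, y) hit points."""
    points = []
    for i in range(n_beams):
        angle = 2 * math.pi * i / n_beams
        dists = (ray_circle_distance(angle, c) for c in circles)
        hits = [d for d in dists if d is not None]
        if hits and min(hits) <= max_range:
            d = min(hits)
            points.append((d * math.cos(angle), d * math.sin(angle)))
    return points


def time_of_flight(distance):
    """Round-trip time of a laser pulse: it travels to the target and back."""
    return 2 * distance / SPEED_OF_LIGHT
```

`time_of_flight` reflects the ranging principle in footnote *2: since the pulse makes a round trip, distance equals the speed of light times the measured time, divided by two.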

Differences from Learning with Real-World Images

Reproducing the situations you want AI to learn in real space is like filming a movie with extras. However, AI learning requires a myriad of situational patterns, and it is impossible to reproduce all of these in the real world.

With Dimension, for example, you can easily reproduce a scene in a city like Shibuya with 50 people walking ahead, 10 to the right, 20 to the left, and 30 behind.
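A scene specification like the Shibuya example can be thought of as a count of people per region. The following is a minimal sketch under stated assumptions, not Dimension's scene format (which this article does not describe): the camera is assumed to sit at the origin facing +y, each region is a hypothetical rectangle in meters, and `place_crowd` scatters the requested number of people uniformly inside each one. A fixed seed makes the scene reproducible.

```python
import random

# Hypothetical regions relative to a camera at the origin facing +y,
# given as (x_min, x_max, y_min, y_max) in meters.
REGIONS = {
    "front": (-10.0, 10.0, 5.0, 30.0),
    "right": (5.0, 20.0, -5.0, 5.0),
    "left": (-20.0, -5.0, -5.0, 5.0),
    "back": (-10.0, 10.0, -30.0, -5.0),
}


def place_crowd(counts, seed=0):
    """Return one (region, x, y) spawn position per requested person."""
    rng = random.Random(seed)  # seeded so the same scene can be regenerated
    people = []
    for region, n in counts.items():
        x0, x1, y0, y1 = REGIONS[region]
        for _ in range(n):
            people.append((region, rng.uniform(x0, x1), rng.uniform(y0, y1)))
    return people


scene = place_crowd({"front": 50, "right": 10, "left": 20, "back": 30}, seed=42)
print(len(scene))  # 110
```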

You can specify the general movements of the human models, while their AI keeps a certain degree of freedom of action. For example, you can have dozens of people walking back and forth while collisions between them are avoided automatically. This matters because scripting every movement by hand takes a great deal of time, while making every movement random makes it impossible to reproduce the specific situation you want the AI to learn.
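The balance between specified movement and automatic collision avoidance can be sketched with one common, simple technique: each person steers toward an assigned goal, and any neighbor closer than a set radius adds a repulsive push. This is an illustrative sketch, not Couger's person-control AI, which the article does not describe. The function `step` and all of its parameters are hypothetical; the default speed of 1.4 m/s is an assumed typical walking pace.

```python
import math


def step(positions, goals, speed=1.4, dt=0.1, radius=1.0, repulsion=2.0, max_speed=2.0):
    """Advance every person by one time step of `dt` seconds.

    Each person walks toward its goal; neighbors closer than `radius`
    push it away, more strongly the closer they are.
    """
    new_positions = []
    for i, (x, y) in enumerate(positions):
        gx, gy = goals[i]
        dx, dy = gx - x, gy - y
        dist = math.hypot(dx, dy)
        vx = vy = 0.0
        if dist > 1e-9:
            s = min(speed, dist / dt)  # slow down rather than overshoot the goal
            vx, vy = dx / dist * s, dy / dist * s
        for j, (ox, oy) in enumerate(positions):
            if j == i:
                continue
            rx, ry = x - ox, y - oy
            gap = math.hypot(rx, ry)
            if 1e-9 < gap < radius:
                push = repulsion * (radius - gap) / radius
                vx += rx / gap * push
                vy += ry / gap * push
        v = math.hypot(vx, vy)
        if v > max_speed:  # cap speed so a strong push cannot launch anyone
            vx, vy = vx / v * max_speed, vy / v * max_speed
        new_positions.append((x + vx * dt, y + vy * dt))
    return new_positions


# Two hypothetical pedestrians walking toward each other with a slight offset.
positions = [(0.0, 0.0), (10.0, 0.3)]
goals = [(10.0, 0.0), (0.0, 0.3)]
for _ in range(100):
    positions = step(positions, goals)
```

Only the goals are specified; the avoidance behavior emerges from the repulsion term, which mirrors the trade-off described above between scripting every movement and leaving everything to chance.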

With live-action images, objects must be segmented afterward (e.g., "this is a human," "this is a tree") and their depths set before the AI can learn from them, which is time-consuming. With Dimension, this step is unnecessary, because segmentation and depth images are generated alongside the RGB images.
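Why labeling becomes unnecessary can be shown in a few lines. Because a simulator places every object itself, its renderer can record which object covers each pixel, and a segmentation image is then just a lookup. This minimal sketch assumes a hypothetical per-pixel object-ID buffer and hypothetical ID-to-class tables; Dimension's actual output format is not described in this article.

```python
# Hypothetical tables: which class each rendered object belongs to,
# and the integer label used for each class in the segmentation image.
ID_TO_CLASS = {0: "background", 1: "human", 2: "human", 3: "tree"}
CLASS_TO_LABEL = {"background": 0, "human": 1, "tree": 2}


def segmentation_mask(id_buffer, id_to_class=ID_TO_CLASS):
    """Convert a per-pixel object-ID buffer into per-pixel class labels."""
    return [[CLASS_TO_LABEL[id_to_class[obj_id]] for obj_id in row] for row in id_buffer]
```

With a real camera, the same mask would have to be drawn by hand or predicted by another model.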

Our Unique Technology

Creating a dynamic model of a human being is far more complex, and requires far more advanced technology, than modeling a road or a building. Couger, which has been involved in game development since its founding, developed "Dimension" by drawing on the knowledge it gained from building character AI that moves autonomously in game spaces. As a result, the people who appear in "Dimension" are not merely human in appearance; they are "characters" that move in a human-like manner.

Examples of Learning Applications

- Self-driving cars navigating intersections with many people present

- Robots understanding and responding to people's situations in spaces such as offices and schools

- Robots carrying luggage through shopping malls without bumping into people

- Driverless taxis stopping for people who raise their hands on the sidewalk

Basic Functions

- Training data generation for machine learning: RGB images, segmentation images, depth images, LIDAR point cloud data

- Background image synthesis: Composite 3D models of people and other objects onto arbitrary images

- 3D background assets: Urban data

- Continuous data generation: Create time-series data by freely moving people, vehicles, and cameras

- Action scenario tool: Script scenarios of actions to generate continuous data

- Person control AI: Collision avoidance, road recognition

- Random generation and placement of people and environment: Mass-produce data by randomly generating and placing people and environmental elements such as lighting

- Person customization function: Customization of person body shape, hair color, clothing color, and skin tone

- Baggage for people: Customize the color and appearance of bags and other carried items

- Human motions: A variety of motions for people
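The random generation and customization functions above amount to sampling scene parameters, a practice often called domain randomization. The sketch below is hypothetical: the article lists the categories (body shape, hair color, clothing color, skin tone, bags, lighting) but not their values, so every range, list, and name here (`Person`, `Scene`, `random_scene`) is an illustrative assumption.

```python
import random
from dataclasses import dataclass

# Hypothetical attribute choices; the real options are not given in the article.
HAIR_COLORS = ["black", "brown", "blonde", "red", "gray"]
CLOTHING_COLORS = ["white", "black", "navy", "red", "green", "beige"]


@dataclass
class Person:
    height_m: float
    build: float        # hypothetical 0.0 (slim) to 1.0 (heavy) body-shape blend
    hair_color: str
    clothing_color: str
    skin_tone: float    # hypothetical 0.0 (light) to 1.0 (dark) scale
    carries_bag: bool


@dataclass
class Scene:
    sun_elevation_deg: float
    light_intensity: float
    people: list


def random_scene(rng, n_people):
    """Sample one scene with randomized people and lighting."""
    people = [
        Person(
            height_m=rng.uniform(1.5, 1.95),
            build=rng.random(),
            hair_color=rng.choice(HAIR_COLORS),
            clothing_color=rng.choice(CLOTHING_COLORS),
            skin_tone=rng.random(),
            carries_bag=rng.random() < 0.3,
        )
        for _ in range(n_people)
    ]
    return Scene(
        sun_elevation_deg=rng.uniform(5.0, 80.0),
        light_intensity=rng.uniform(0.3, 1.0),
        people=people,
    )


rng = random.Random(0)  # fixed seed: the same dataset can be regenerated exactly
dataset = [random_scene(rng, n_people=rng.randint(5, 40)) for _ in range(1000)]
```

Seeding the generator keeps mass-produced data reproducible, which makes it possible to regenerate a specific scene later for debugging or comparison.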

*1 3DCG: 3D computer graphics

*2 LIDAR: A sensing method that measures the distance to a target by illuminating it with a laser beam and measuring the reflected light.

About “LUDENS”

We are developing "LUDENS," our proprietary technology that combines AI, IoT, AR, and blockchain to make spaces smart.

Web : https://couger.co.jp/
