![[Invited Lecture at Stanford University] Couger Announces Development of Virtual Human Agent](https://couger.co.jp/news-en/wp-content/uploads/2020/11/sf9-min-1024x768-1.png)

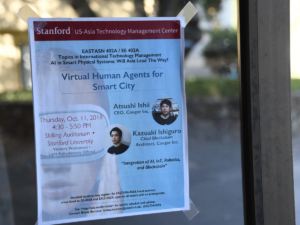

Couger delivers Virtual Human Agent lecture at Stanford University

On October 11, 2018, Professor Richard Dasher of the US-Asia Technology Management Center at Stanford University welcomed Ishii and Ishiguro of Couger Corporation to describe the current state of Ludens, the project we are working on. In this article, we summarize the content of their presentations.

- Couger Invited to World-Renowned Stanford University

- What is the US-Asia Technology Management Centre for Cutting-Edge Research?

- Couger's lecture at Stanford University | Virtual Human Agent

- [Stanford 1] Presentation by Atsushi Ishii, CEO of Couger

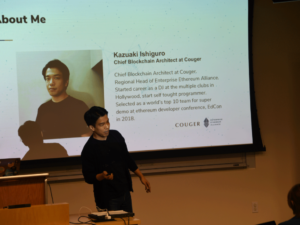

- [Stanford 2] Presentation by Kazuaki Ishiguro, Chief Blockchain Architect at Couger

- Summary

1. Couger invited to Stanford University, one of the most prestigious universities in the world

Stanford University (www.stanford.edu) is a historic university located between San Francisco and San Jose, and it is arguably the heart of Silicon Valley, both academically and geographically. The spirit of the school motto, "Die Luft der Freiheit weht" (German for "The wind of freedom blows"), can still be felt on this spacious, open, bright and relaxed campus.

With an acceptance rate of 4.7% (as of 2017), Stanford is the most selective university in the United States. In the Times Higher Education World University Rankings 2018, Oxford University ranked No. 1, Cambridge University No. 2, and Stanford University No. 3 (tied with Caltech).

Students can be found studying in various places on campus, including the spacious campus café and garden.

The list of notable alumni is long.

Stanford alumni include 18 astronauts and, in information technology, Vinton Cerf, one of the "fathers of the Internet"; Larry Page and Sergey Brin of Google; David Filo and Jerry Yang of Yahoo; and Martin Hellman, co-inventor of public-key cryptography. John McCarthy, the artificial intelligence pioneer who created the LISP programming language, was a longtime Stanford professor.

Stanford has also been home to many influential research projects.

One of the first nodes of ARPANET (Advanced Research Projects Agency Network), one of the world's first operational packet-switching computer networks, was installed at the Stanford Research Institute (SRI, https://www.sri.com/). Stanford also developed FM (frequency modulation) sound synthesis, which was later licensed to Yamaha for its synthesizers. Today, it continues to produce a large number of founders in the computing industry. It is no exaggeration to say that Stanford is one of the universities that has done the most to shape American, and possibly global, industry.

2. What is the US-Asia Technology Management Center, a hub for cutting-edge research?

The US-Asia Technology Management Center (US-ATM) is an education and research center established at Stanford University in 1992, originally to study technology management in the US and Japan. Its activities include organizing public lecture series and seminars, supporting research projects, developing new university courses, and running web projects.

The center's research program examines cutting-edge developments from the perspective of international strategic technology management. As part of Professor Richard Dasher's AI seminar series, Couger was invited to the center to speak to Stanford students about Ludens.

3. Couger's lecture at Stanford University | Virtual Human Agent

Couger CEO Ishii and Chief Blockchain Architect Ishiguro were invited as speakers at this event. The presentation slides and video are available on the Stanford University website.

Below is a brief summary of the presentations.

[Stanford Presentation 1] Presentation by Atsushi Ishii, CEO of Couger

Ishii began with an overview of Ludens, a new interface project Couger is developing to connect society with AI, AR, and blockchain.

In the coming IoT era, everything around us, such as cars, drones, airplanes, home appliances, and robots, is expected to be connected through autonomous technology. We are developing the Virtual Human Agent (VHA) as one of the interfaces that will make this autonomous technology a reality.

A Virtual Human Agent is a character in human form with senses such as sight and hearing, as well as emotions and intelligence. It can respond to images, situations, and events in the real world, operate home appliances, and think and communicate as if it were a human being.

Using RACHEL, a Virtual Human Agent currently under development, as an example, Ishii explained that giving the interface a human-like appearance makes it easier for users to interact with the agent, which opens the door to a wide variety of future applications.

"Currently, there is a lot of interest in developing in-vehicle applications," he said. Based on the conversation among the people in the car, the agent can suggest buying drinks, choose music to play, and so on, just like an attentive friend who makes the cabin and the drive more comfortable.

Virtual Human Agents make holistic judgments based on images, voice, and past history and actions, and then carry out suggestions that make people's activities and lives more comfortable.

Rather than a one-way model in which the user commands Alexa or Siri to do this or that, it is a cooperative style of interaction that is distinctly Japanese.

Because developing Virtual Human Agents is beyond the scale of any single company, we are building a system in which developers and creators worldwide can participate in and share LUDENS through an SDK (Software Development Kit) now under development, so that many different people can create and use new Virtual Human Agents.

[Stanford Presentation 2] Presentation by Kazuaki Ishiguro, Chief Blockchain Architect at Couger

Chief Blockchain Architect Ishiguro gave a technical presentation on Ludens and Virtual Human Agents. In particular, he discussed the trustworthiness of AI and why blockchain is necessary for projects like this.

On the trustworthiness of AI, he pointed out that systems currently on the market, such as Alexa, collect data in a centralized manner, and that in the future it will be important to manage data in a decentralized manner using blockchain.

He also introduced GeneFlow, a project Couger is currently developing, and explained to the students the importance of building a flow that records the history of AI using blockchain technology.

Looking ahead, the two stressed that the project must be built collaboratively with the global community, and noted that Couger has already held hackathons and meetups around the world. They also presented their idea for a system that lets participants sell their creations in the LUDENS marketplace.

The students listened intently to the presentations. Afterwards, the two speakers joined the organizer, Professor Dasher, for a three-way discussion, followed by a lively Q&A with the students. Here are some excerpts.

[Stanford Presentation 3] Q&A

Professor: First of all, why did you start Couger?

Ishii: Couger started out as a game development company. While developing games, we started working on AI and robotics. In the process, I realized that data is very important for AI. From there, I got into blockchain and realized that we must use it to protect AI.

Professor: How long has the company been around, and how many people work for you?

Ishii: It has been 10 years since we started making games. We now have 18 staff members. We have operations in Tokyo and Singapore, and we plan to open offices in Berlin and the U.S. as we expand our business globally.

Professor: Your approach is different from that of the famous Prof. Hiroshi Ishiguro of Osaka University. What do you think of his robots? (laughs)

Ishiguro: His robots have limitations. They are expensive, and updates often take time. I think that once Virtual Human Agents are distributed through marketplaces, people will be able to pay tokens to create the virtual human that suits them best (laughs).

Ishii: I hope that someday other companies will make hardware for Virtual Human Agents, like the devices in Blade Runner, so that we can use it.

Student: Can the appearance and personality of a Virtual Human Agent be made to resemble a real person?

Ishiguro: There will be customizable templates for human-like traits. For example, my own agent could be customized to be afraid of snakes.

Student: Why did you make them human-like instead of giving them an inanimate form like Alexa?

Ishii: Because I think that making them look like your favourite game characters or friends lets you interact with them more casually.

Student: Can you name applications where this technology would be especially valuable?

Ishii: I can think of two use cases.

The first is customer reception. I think Virtual Human Agents have a lot of potential in stores and restaurants, especially with children.

The second is cars. The car becomes like your own room, with someone you can talk to at any time.

Student: What is the difference between a Virtual Human Agent and a chatbot?

Ishiguro: It is indeed similar to a chatbot, and they use similar technology. The difference is that Virtual Human Agents go beyond conversation: they can sense context and use vision to read facial expressions.

For example, if you type "I am angry" into a chatbot, it will respond, "I don't know why you are angry."

A Virtual Human Agent, by contrast, can tell from my facial expressions and movements that I am angry. If I type "I am not angry" to a chatbot while my face clearly shows anger, the chatbot has no way of knowing. That is the difference.

Student: There are applications such as autonomous driving. Are you developing Virtual Human Agents with applications like that in mind?

Ishii: I think the first step is to consider use cases that are well suited to the virtual world. In cars, Virtual Human Agents will play a different role from autonomous driving and navigation.

We will focus first on entertainment and amusement, such as tour guides on buses, and then train the agents and add functionality.

Ishiguro: Our first use cases are limited. In addition to what Ishii mentioned, once 5G (fifth-generation mobile communication) networks are rolled out, we will be able to download large datasets.

In the future, when you are in a car, you will be able to talk to a Virtual Human Agent as if someone were really in front of you or beside you. I think that day is near.

4. Summary

Stanford University gave us the opportunity to present use cases for Virtual Human Agents in the San Francisco Bay Area. The feedback we received made it a valuable session for our future development and use cases.

At Couger, we are looking for colleagues who can help us advance the implementation of our technology not only in Japan but also in the global domain!