Couger Inc. (Headquarters: Shibuya-ku, Tokyo; CEO: Atsushi Ishii) announces the launch of its “LUDENS SDK”, which enables the development of Human-like AI assistants in JavaScript. We are also pleased to announce that Mr. Hiroshi Yamakawa, President of the Whole Brain Architecture Initiative, has been appointed as an advisor. By incorporating human science, he will help us develop an AI assistant that connects people and technology more seamlessly.

Background of the Human-like AI Assistant LUDENS

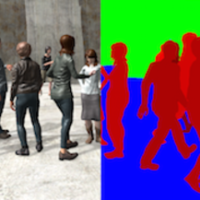

Couger has developed and provided various technologies related to Human-like AI. These include game AI technology for KDDI's "Virtual Character xR"; an AI learning simulator specialized for human motion, built as a learning environment for automated driving technology for the Honda R&D Institute; and a demonstration experiment using a Human-like AI assistant in a shopping mall, conducted in collaboration with Information Services International-Dentsu, Ltd. We have now developed the "LUDENS SDK," a development toolkit for Human-like AI assistants. It comes equipped with AI modules, such as voice and video recognition, and a 3D modeled Virtual Human that communicates in real time using facial expressions and body language.

Goals of the “LUDENS SDK”

In recent years, the use of anime and video game characters has expanded beyond the film and video game space to a wide range of fields, including character goods and theme parks. Furthermore, the influence of "individuals" such as YouTubers and Instagrammers, both professional and amateur, is increasing.

On the other hand, because stuffed-animal characters, cosplayers, YouTube videos, and similar content all depend on human performers, they tend to be labor-intensive, and the cost of introducing them is too high for many companies. Character intellectual property used in goods and videos is also limited in expressiveness: it cannot respond to the recipient's reactions with matching facial expressions and body movements.

The “LUDENS SDK” makes it easy to develop and extend Human-like AI assistants to fit a company's sales objectives and brand. As a result, you can deploy advanced and rich Human-like AI assistants on any device, including tablets and digital signage.

Human-like AI assistants with advanced recognition technology will meet a wide range of needs, including those of companies suffering from chronic labor shortages in the service and medical fields, companies requiring foreign language support, and companies in the gaming and entertainment industries.

Appointment of Hiroshi Yamakawa as an Advisor

We have invited Mr. Hiroshi Yamakawa, the president of the Whole Brain Architecture Initiative, who is one of the world's leading researchers in general-purpose artificial intelligence, to serve as an advisor in our efforts to create an interface that extends communication between humans and technology. With advice drawn from Mr. Yamakawa's wealth of knowledge and experience, we will continue to refine the Human-like AI assistant to attract people's attention and bring it closer to stress-free conversation.

Comments from Hiroshi Yamakawa

“The Virtual Human Agent (VHA) being developed by Couger is a promising autonomous technology that will have a significant impact on users, making it different from inorganic information terminals. I am pleased to accept the position of advisor because I believe that I can make a small contribution to making VHA's cognition, memory, and emotions more relevant and compatible with human society.”

Profile of Hiroshi Yamakawa

Yamakawa graduated from Tokyo University of Science, Faculty of Science, in 1987, and obtained a doctorate in engineering from the University of Tokyo in 1992. He studied reinforcement learning models using neural circuits. After joining Fujitsu Laboratories Ltd. the same year, he engaged in research on concept acquisition, cognitive architecture, educational games, and the Shogi project. He serves as president of the Whole Brain Architecture Initiative, a non-profit organization that promotes the development of general-purpose artificial intelligence with reference to brain architecture.

Features of “LUDENS SDK”

- JavaScript programming: Virtual Human behavior can be programmed in JavaScript.

- Real-time expression: 3D modeled Virtual Humans respond to communication in real time with facial expressions and body movements.

- Object Recognition: Virtual Human behavior can be programmed according to the objects recognized by the camera and the facial expressions and gestures of the people it recognizes.

- Speech Recognition: Virtual Human behavior can be programmed in response to the speech recognized by the microphone.

- Emotion Recognition: The Virtual Human's emotional values change as it communicates, much as a person's would. These emotions can be combined with visual and auditory input in a program.

- External linkage: The ability to link with external APIs allows users to search for weather and web information, send messages to someone, and register a schedule.
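The features above describe an event-driven style of programming: recognition results arrive, an emotional value shifts, and the Virtual Human responds. The following is a minimal sketch of that idea in plain JavaScript. It does not use the LUDENS SDK, whose API is not described here; the class `VirtualHumanSketch`, its methods (`on`, `emit`, `adjustJoy`, `perform`), the event names, and the `joy` value are all hypothetical names chosen for illustration.

```javascript
// Hypothetical sketch: none of these names come from the LUDENS SDK.
class VirtualHumanSketch {
  constructor() {
    this.handlers = new Map(); // event name -> array of handler functions
    this.emotion = { joy: 0.5 }; // assumed emotional value, kept in [0, 1]
    this.actions = []; // log of behaviors the character would perform
  }

  // Register a behavior for a recognition event such as "speech" or "face".
  on(eventName, handler) {
    const list = this.handlers.get(eventName) ?? [];
    list.push(handler);
    this.handlers.set(eventName, list);
    return this;
  }

  // Shift the joy value by delta, clamping it to the range [0, 1].
  adjustJoy(delta) {
    this.emotion.joy = Math.min(1, Math.max(0, this.emotion.joy + delta));
  }

  // Record a behavior; a real runtime would drive the 3D model instead.
  perform(expression, speech) {
    this.actions.push({ expression, speech });
  }

  // Deliver a recognition result to every handler registered for it.
  emit(eventName, payload) {
    for (const handler of this.handlers.get(eventName) ?? []) {
      handler(payload, this);
    }
  }
}

const assistant = new VirtualHumanSketch();

// Visual input: a smiling visitor raises the character's joy.
assistant.on("face", (face, vh) => {
  if (face.expression === "smile") vh.adjustJoy(0.2);
});

// Auditory input: the greeting style depends on the current emotional value.
assistant.on("speech", (speech, vh) => {
  if (/hello/i.test(speech.text)) {
    const happy = vh.emotion.joy > 0.6;
    vh.perform(
      happy ? "big_smile" : "neutral",
      happy ? "Hello! Great to see you!" : "Hello."
    );
  }
});

// Simulated recognition results standing in for camera and microphone input.
assistant.emit("face", { expression: "smile" });
assistant.emit("speech", { text: "Hello there" });
console.log(assistant.actions);
```

Keeping the emotional value as ordinary state that handlers read and update is one simple way to combine emotion with visual and auditory input; an actual SDK may model this quite differently.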

“LUDENS SDK” Architecture

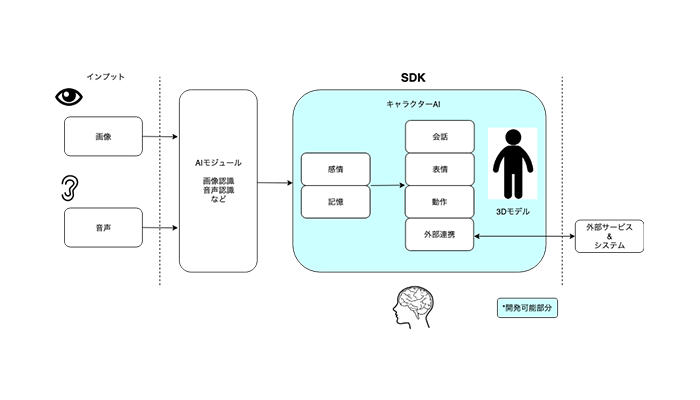

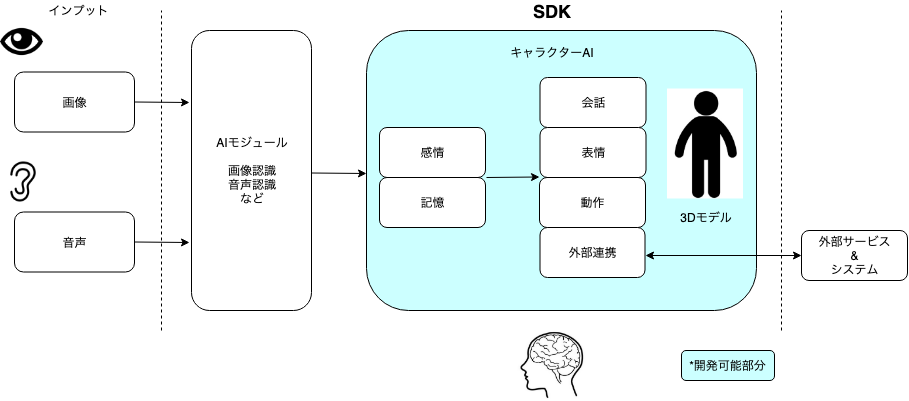

The AI module recognizes input data such as conversations, facial expressions, and body size. Based on those recognition results, the Character AI controls the behavior of the 3D modeled Human-like AI assistant.
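The flow described here, from recognition to Character AI to the 3D model, can be pictured as three small stages chained together. The sketch below is an assumption-laden illustration in plain JavaScript, not the SDK's actual interface: the functions `recognize`, `decide`, and `render`, the input fields `transcript` and `faceLabel`, and the animation names are all hypothetical.

```javascript
// Hypothetical sketch of the flow: AI module -> Character AI -> 3D model.

// AI module stage: normalize raw recognizer output into one percept object.
function recognize(input) {
  return {
    utterance: (input.transcript ?? "").trim().toLowerCase(),
    expression: input.faceLabel ?? "unknown",
  };
}

// Character AI stage: choose what the character should do for a percept.
function decide(percept) {
  if (percept.utterance.includes("thank")) {
    return { animation: "bow", face: "smile", say: "You're welcome." };
  }
  if (percept.expression === "confused") {
    return { animation: "lean_in", face: "concerned", say: "Can I help you?" };
  }
  return { animation: "idle", face: "neutral", say: "" };
}

// 3D model stage: here only a text formatter; a real renderer would animate.
function render(command) {
  return `[${command.animation}/${command.face}] ${command.say}`.trim();
}

// One simulated frame of input passing through all three stages.
const frame = { transcript: " Thank you! ", faceLabel: "smile" };
const output = render(decide(recognize(frame)));
console.log(output);
```

Separating the stages this way keeps the decision logic independent of both the recognizers and the renderer, so each can be swapped or tested on its own.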

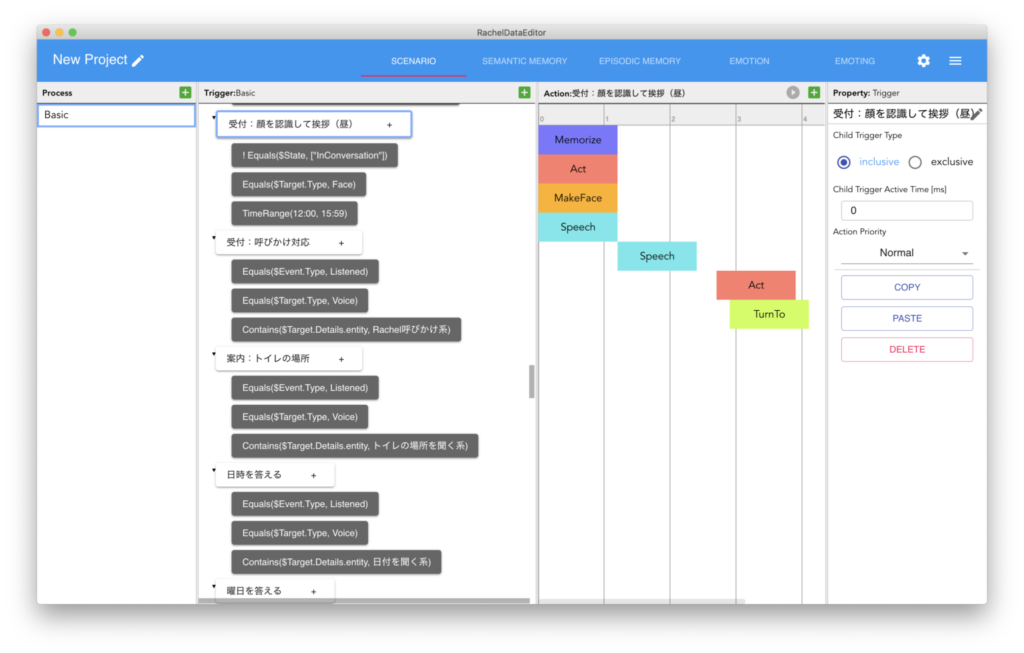

Supports development with JavaScript and GUI tools

In the future, we plan to release a GUI tool that will allow users to customize the behavior of Human-like AI assistants without programming. This will make it easier for many users to adopt Virtual Humans.

For more information, please contact us.
